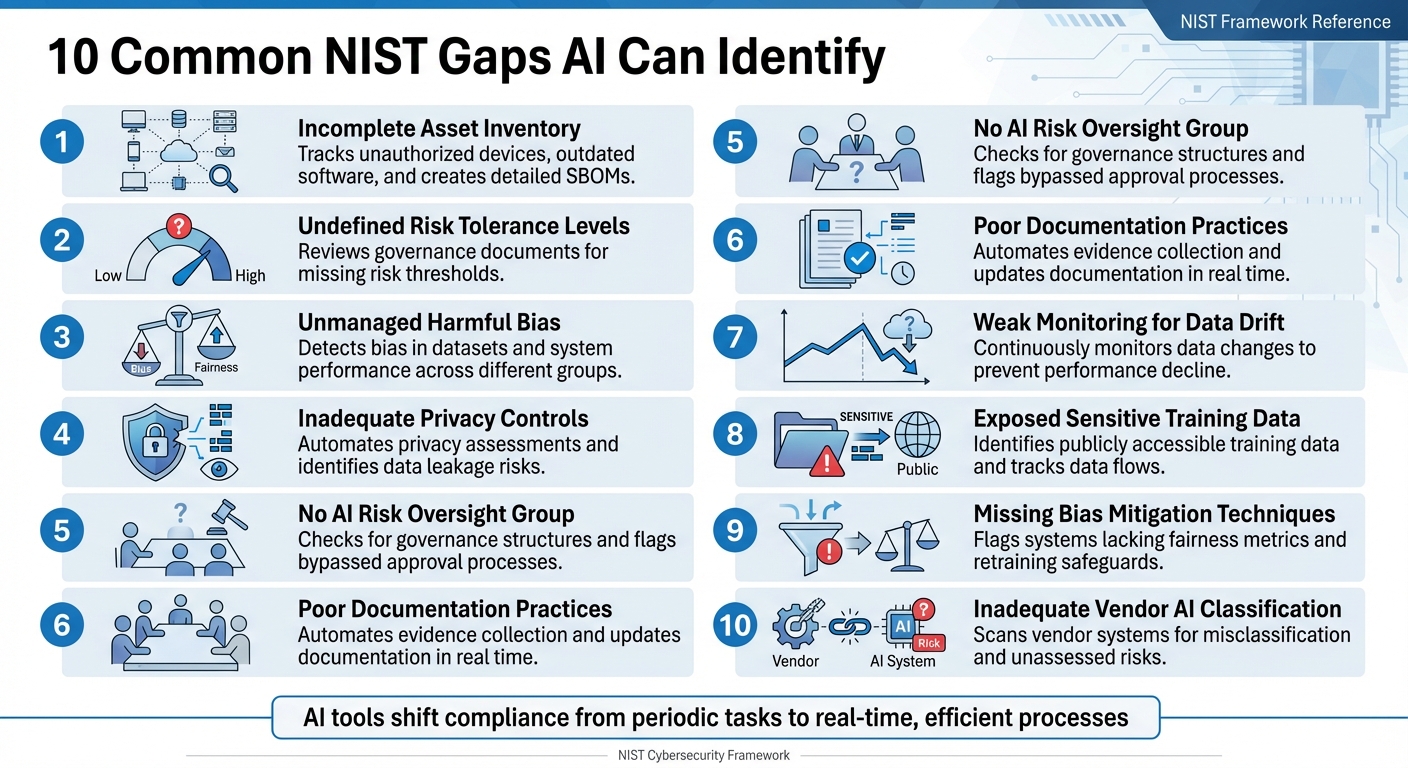

Organizations often struggle to achieve full compliance with the NIST Cybersecurity Framework (CSF), especially its latest version, CSF 2.0. Manual audits are slow and error-prone, leaving gaps in areas like asset tracking, risk management, and privacy controls. AI-driven tools help by automating compliance checks, providing continuous monitoring, and identifying gaps in real time. Here are 10 common NIST compliance gaps AI can address:

- Incomplete Asset Inventory: AI tracks unauthorized devices and outdated software, and creates detailed Software Bills of Materials (SBOMs).

- Undefined Risk Tolerance Levels: AI reviews governance documents for missing risk thresholds.

- Unmanaged Harmful Bias: AI detects bias in datasets and system performance across different groups.

- Inadequate Privacy Controls: AI automates privacy assessments and identifies risks like data leakage.

- No AI Risk Oversight Group: AI checks for governance structures and flags bypassed approval processes.

- Poor Documentation Practices: AI automates evidence collection and updates documentation in real time.

- Weak Monitoring for Data Drift: AI tools continuously monitor data changes to prevent performance decline.

- Exposed Sensitive Training Data: AI identifies risks like publicly accessible training data and tracks data flows.

- Missing Bias Mitigation Techniques: AI flags systems lacking fairness metrics and retraining safeguards.

- Inadequate Vendor AI Classification: AI scans vendor systems for misclassification and unassessed risks.

AI tools like ISMS Copilot streamline compliance by automating gap analysis, aligning controls with NIST standards, and generating audit-ready documentation. This shifts compliance from a periodic task to a real-time, efficient process.

10 Common NIST Compliance Gaps AI Can Identify and Address

1. Incomplete Asset Inventory

Having a thorough asset inventory is crucial for meeting NIST compliance requirements. Yet, many organizations struggle to keep track of every device, application, and software component they use. When assets go untracked, they create vulnerabilities that attackers can exploit.

A NIST 800-53 Copilot can help by comparing the actual state of your network to its desired state. Continuous scanning highlights any deviations from your approved asset list, making it easier to spot issues like unauthorized devices or outdated software that lack critical security patches. This ongoing process not only strengthens risk management but also provides valuable insights into your network's security.

"The focus of the SWAM capability is to manage risk created by unmanaged or unauthorized software on a network. Unmanaged or unauthorized software is a target that attackers can use as a platform from which to attack components on the network." – NIST IR 8011 Vol. 3

Beyond scanning, CDM dashboards offer real-time visibility into your asset inventory, moving away from outdated, static audits to continuous monitoring. AI systems can also automatically create a detailed Software Bill of Materials (SBOM) for every software build, cataloging every component and dependency - whether direct or indirect. This functionality is increasingly important as nearly 220,000 U.S. organizations must comply with CMMC standards, with 80,000 of them subject to Level 2 controls that align with NIST SP 800-171.

To improve your asset inventory, consider using Software Identification (SWID) tags to automate the discovery of software across your network. You can also implement automated whitelisting to ensure that only authorized software is allowed to run. For AI systems, it’s essential to inventory not just hardware but also the third-party integrations they rely on, as these external components can introduce additional risks.
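The actual-versus-desired comparison described in this section can be sketched in a few lines of Python. This is a minimal illustration, not how any particular scanner or CDM dashboard works: the `Asset` record, the `inventory_gaps` function, and the host and software names are all hypothetical, and a real inventory would come from scan results or SWID tags rather than hard-coded sets.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    """One software component on one host (hypothetical record shape)."""
    hostname: str
    software: str
    version: str


def inventory_gaps(approved: set[Asset], observed: set[Asset]) -> dict[str, list[Asset]]:
    """Compare the approved (desired) inventory with what a scan observed (actual)."""

    def key(asset: Asset) -> tuple[str, str]:
        return (asset.hostname, asset.software)

    approved_keys = {key(a) for a in approved}
    observed_keys = {key(a) for a in observed}
    return {
        # Software running on a host where it was never approved.
        "unauthorized": sorted((a for a in observed if key(a) not in approved_keys), key=key),
        # Approved software whose observed version differs from the approved one.
        "version_mismatch": sorted(
            (a for a in observed if key(a) in approved_keys and a not in approved), key=key
        ),
        # Approved assets the scan did not find at all.
        "not_observed": sorted((a for a in approved if key(a) not in observed_keys), key=key),
    }


approved = {
    Asset("web-01", "nginx", "1.26.2"),
    Asset("db-01", "postgres", "16.4"),
}
observed = {
    Asset("web-01", "nginx", "1.18.0"),
    Asset("web-01", "netcat", "1.10"),
}

gaps = inventory_gaps(approved, observed)
for category, assets in gaps.items():
    print(category, [f"{a.hostname}:{a.software} {a.version}" for a in assets])
```

Running the same comparison on every scan, rather than once per audit cycle, is what turns a static inventory into continuous monitoring.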

2. Undefined Risk Tolerance Levels

Many organizations recognize the risks associated with AI but often fail to establish clear thresholds for what is acceptable. Without these defined boundaries, teams can find it challenging to determine when to move forward or hit pause on a project. This is where AI tools come in handy. Using natural language processing, cross-framework ISMS assistants can review policies, risk management strategies, and governance frameworks to confirm whether specific criteria - like quantitative limits or qualitative boundaries - have been set for different AI applications. For example, systems that handle sensitive data, such as personally identifiable information (PII), require stricter thresholds to ensure safety and compliance. When these safeguards are missing, immediate corrective action becomes necessary, as emphasized by NIST.

"To the extent that challenges for specifying AI risk tolerances remain unresolved, there may be contexts where a risk management framework is not yet readily applicable for mitigating negative AI risks." - NIST AI RMF 1.0

NIST defines risk tolerance as "the organization's or AI actor's readiness to bear the risk to achieve its objectives". AI tools play a crucial role in scanning governance documents to check for clearly documented risk acceptance criteria, whether they involve numerical limits or broader qualitative boundaries. However, the NIST AI Risk Management Framework, introduced on January 26, 2023, does not mandate specific tolerance levels. Instead, it highlights the importance of identifying gaps - like missing documentation on residual risks or vague risk management strategies - that could leave organizations exposed.

NIST offers a clear directive for such scenarios:

"In cases where an AI system presents unacceptable negative risk levels... development and deployment should cease in a safe manner until risks can be sufficiently managed." - NIST AI RMF 1.0

3. Unmanaged Harmful Bias

Bias in AI systems stems from the interplay of code, training data, and the broader social context. Without proper compliance policies, these systems can unintentionally worsen existing inequalities or even create new patterns of discrimination. This has real-world consequences in areas like healthcare, hiring, and finance. The issue is compounded by the complexity of AI systems, which often involve countless decision points, making it nearly impossible to manually identify and address biases. Organizations often turn to an AI compliance assistant to manage these complex, multi-step workflows.

"Without proper controls, AI systems can amplify, perpetuate, or exacerbate inequitable or undesirable outcomes for individuals and communities." - NIST AI RMF 1.0

AI tools tackle harmful bias through processes like Test, Evaluation, Verification, and Validation (TEVV), as outlined in the NIST AI RMF's MEASURE function. These processes assess whether training datasets align with their intended purpose and reflect current societal conditions. Automated monitoring plays a key role here, identifying "data drift", which refers to changes in input data that can impact system performance and introduce new biases that may not have been present during initial testing.

This detection process goes beyond crunching numbers. AI tools evaluate how systems perform across various sub-groups, recognizing that even people who don’t directly use the system can be affected. This approach, often called socio-technical evaluation, considers not only the technical design but also how it interacts with societal norms and human behavior to produce inequities. To ensure objectivity, NIST advocates for a clear division of responsibilities - teams building AI models should be separate from those verifying and validating them. This setup allows for more impartial assessments and continuous monitoring for bias across all user groups.

Another key strategy to manage bias is enhanced data tracking. For example, organizations using AI-native visibility tools reported a staggering 1,660% increase in their ability to monitor data processing activities within just three weeks of implementation. These tools map "Data Journeys", tracing how data flows from its source through AI models. By doing so, they can predict and prevent potential violations in real time. This is critical since AI processes data at speeds far beyond what manual teams can handle. Tracking these data flows is essential to catching shifts that might lead to new or worsening biases before they cause harm.

4. Inadequate Privacy Controls

The rapid pace and scale of AI systems make privacy gaps a significant concern, especially since manual oversight struggles to keep up. Without proper privacy safeguards, organizations risk exposing personally identifiable information (PII) through training data leaks, unauthorized access, or unintended disclosures in model outputs. The reliance on APIs for data ingestion and inference further increases the attack surface, making robust privacy controls a necessity.

To address these challenges, AI tools, including specialized compliance assistants, now automate privacy control assessments using machine-readable formats like OSCAL. These tools continuously evaluate controls outlined in frameworks such as NIST SP 800-53, which OSCAL expresses in JSON, XML, and YAML. Notably, NIST released version 5.2.0 of SP 800-53 on August 27, 2025. Since Revision 5, the catalog has integrated privacy controls alongside security controls, moving away from the earlier standalone approach.
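To make the machine-readable idea concrete, here is a small sketch that reads an OSCAL-style JSON catalog and lists the controls in one family that have no recorded assessment. The embedded fragment is heavily simplified (a real OSCAL catalog carries metadata, parameters, and parts for each control), and the `unassessed_controls` helper and the `assessed` set are assumptions made for illustration.

```python
import json

# Simplified OSCAL-style catalog fragment for the PT family (illustrative only).
CATALOG_FRAGMENT = """
{
  "catalog": {
    "groups": [
      {
        "id": "pt",
        "title": "Personally Identifiable Information Processing and Transparency",
        "controls": [
          {"id": "pt-1", "title": "Policy and Procedures"},
          {"id": "pt-2", "title": "Authority to Process Personally Identifiable Information"},
          {"id": "pt-3", "title": "Personally Identifiable Information Processing Purposes"}
        ]
      }
    ]
  }
}
"""


def unassessed_controls(catalog_json: str, family: str, assessed: set) -> list:
    """Return control IDs in the given family that have no assessment on record."""
    catalog = json.loads(catalog_json)["catalog"]
    missing = []
    for group in catalog.get("groups", []):
        if group["id"] != family:
            continue
        for control in group.get("controls", []):
            if control["id"] not in assessed:
                missing.append(control["id"])
    return missing


# Hypothetical assessment state: only pt-1 has evidence attached so far.
print(unassessed_controls(CATALOG_FRAGMENT, "pt", {"pt-1"}))
```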

"Addressing functionality and assurance helps to ensure that information technology products and the systems that rely on those products are sufficiently trustworthy." - Joint Task Force, NIST SP 800-53 Rev. 5

Automated tools focus on the PII Processing and Transparency (PT) control family to identify unauthorized PII use, disclosures, or de-anonymization risks. They also uncover shadow APIs and shadow AI that manual audits often overlook. By implementing "Policy-as-Code", organizations can convert the complex requirements of NIST SP 800-53 into real-time operational rules. This process is streamlined when organizations train their AI compliance assistants on high-accuracy models designed for GRC. These rules automatically redact sensitive data from AI prompts and outputs before they are processed by the model.
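As a rough sketch of what a "Policy-as-Code" redaction rule might look like, the snippet below replaces a few PII patterns in a prompt before it would reach a model. The patterns and the `redact` function are illustrative assumptions, not taken from any product or from SP 800-53 itself, and three regular expressions are nowhere near sufficient coverage for real PII detection.

```python
from __future__ import annotations

import re

# Illustrative patterns only; production PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches of each pattern with a placeholder and count what was removed."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, replaced = pattern.subn(f"[{label}_REDACTED]", text)
        if replaced:
            counts[label] = replaced
    return text, counts


prompt = "Contact jane.doe@example.com, SSN 123-45-6789."
clean_prompt, removed = redact(prompt)
print(clean_prompt)
print(removed)
```

Returning the counts alongside the cleaned text gives the rule an audit trail: each redaction event can be logged as evidence that the control actually fired.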

Additionally, NIST's Dioptra software supports red-teaming exercises, helping organizations test privacy and security controls under adversarial conditions. These simulations can measure performance losses and identify specific vulnerabilities. This is especially important given that the NIST AI RMF Generative AI Profile outlines 12 unique or heightened risks associated with AI, including data privacy concerns like leakage and unauthorized de-anonymization. On April 14, 2025, NIST further emphasized these issues by releasing the draft Privacy Framework 1.1, which specifically addresses AI-related privacy risks such as inference attacks and bias amplification.

5. No AI Risk Oversight Group

One major challenge in AI risk management is the absence of a dedicated oversight group. Without such a committee, governance and accountability often fall through the cracks, leading to fragmented and lower-priority efforts. Today, advanced tools leveraging Natural Language Processing (NLP) can help address this gap by analyzing organizational policies, charters, and meeting minutes. These tools can confirm whether a formal AI risk oversight group exists and ensure alignment with the NIST AI RMF "Govern" function - specifically Govern 1.2, which emphasizes the need for clear governance and accountability structures.

AI-driven systems also examine organizational charts and identity management systems to identify formal oversight roles within AI risk management. Governance platforms add another layer of protection by flagging projects that bypass formal approval processes. This ensures continuous oversight, a critical component of adhering to the NIST RMF. The "Govern" function acts as a foundation, influencing all other RMF functions such as Map, Measure, and Manage. This interconnected approach strengthens the overall framework.

"Without defined accountability, AI risk management efforts can become fragmented or deprioritized, leaving the organization exposed." - Kezia Farnham, Senior Manager, Diligent

The urgency for proper oversight is underscored by the rapid growth in AI investment and regulation. In 2024, U.S. private sector investment in AI exceeded $100 billion, while mentions of AI in legislation across 75 countries rose 21.3% since 2023, a ninefold increase since 2016. Without formal oversight mechanisms, organizations risk falling out of step with regulatory expectations such as the EU AI Act and U.S. Executive Order 14110 (rescinded in January 2025), which stress the importance of human oversight and auditable risk management.

To bridge this gap, organizations should form a cross-functional AI risk committee that includes key leaders like the General Counsel, Chief Information Security Officer (CISO), Chief Risk Officer, and AI/ML leads. Assigning responsibility for the AI RMF to a single executive - often the Head of Risk or Legal - can further centralize accountability. Additionally, adopting a purpose-built AI compliance assistant can streamline this process, automating the mapping of roles and responsibilities and replacing outdated manual spreadsheets with continuous monitoring systems.

6. Poor Documentation Practices

Building on earlier issues with asset tracking and oversight, poor documentation further undermines compliance efforts. Documentation gaps are both widespread and costly when it comes to NIST compliance. A striking 86% of organizations lack visibility into their AI data flows, leading to significant documentation and audit gaps that can spell trouble during regulatory reviews. For instance, when auditors request logs of AI interactions involving personal data, many organizations are unable to provide them. This failure can put them in violation of key regulations such as GDPR Article 30, CCPA Section 1798.130, and HIPAA § 164.312.

AI-powered compliance platforms offer a practical solution by replacing outdated manual spreadsheets with real-time dashboards. These dashboards automatically flag missing evidence and documentation issues. They also use continuous control monitoring to detect "drift" - instances where current documentation or policies no longer match the actual state of systems or infrastructure. Automated tools capture real-time configurations and logs, creating comprehensive audit trails.

"Treating every change, deployment, and incident as a documentation event ensures a steady accumulation of evidence that is far more reliable than a rushed post-incident effort." - Abnormal AI

The principle of "document as you go" strengthens compliance by capturing evidence in real time. By integrating with tools like version-controlled wikis and ticketing systems, AI ensures that approvals and screenshots are attached to pull requests. This is especially critical given that only 17% of organizations have implemented automated AI security controls capable of providing the logs required for regulatory audits.

Organizations leveraging automated compliance platforms see major benefits, including reducing review cycles from weeks to mere hours and significantly lowering compliance workloads. These tools also map an organization’s "Current Profile" against a NIST "Target Profile", pinpointing gaps in safeguards and documentation. By automating the "Identify–Assess–Categorize" process, they help ensure that asset inventories and risk classifications remain up to date. This continuous approach to documentation plays a key role in maintaining NIST compliance.
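The Current Profile versus Target Profile comparison is, at its core, a lookup and a subtraction. The sketch below assumes each profile is a mapping from a CSF 2.0 subcategory ID to a maturity score from 0 to 4. That scoring scale is an assumption made for illustration, since the CSF does not prescribe numeric scores, and the `profile_gaps` function is hypothetical.

```python
from __future__ import annotations


def profile_gaps(current: dict[str, int], target: dict[str, int]) -> list[tuple[str, int, int]]:
    """Return (subcategory, current_score, target_score) for every shortfall.

    A subcategory missing from the current profile is treated as score 0.
    Results are ordered with the largest shortfall first.
    """
    gaps = []
    for subcategory, target_score in target.items():
        current_score = current.get(subcategory, 0)
        if current_score < target_score:
            gaps.append((subcategory, current_score, target_score))
    return sorted(gaps, key=lambda gap: (gap[1] - gap[2], gap[0]))


# Hypothetical scores on an assumed 0-4 scale.
target_profile = {"ID.AM-01": 3, "GV.RM-02": 3, "DE.CM-01": 2}
current_profile = {"ID.AM-01": 1, "DE.CM-01": 2}

for subcategory, have, want in profile_gaps(current_profile, target_profile):
    print(f"{subcategory}: current {have}, target {want}")
```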

7. Weak Monitoring for Data Drift

Data drift happens when the data an AI system sees in production diverges over time from the data it was trained on, leading to a decline in performance and reliability. With over 75% of organizations now using AI in at least one business function, failing to monitor these shifts creates a serious compliance risk - one that AI-powered tools are well-equipped to address. Tackling this issue requires more advanced monitoring solutions, as outlined below.
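One common way to quantify drift for a single numeric feature is the Population Stability Index (PSI), which compares how a baseline sample and a live sample are distributed across the same bins. The sketch below is a plain-Python illustration under stated assumptions: the `psi` function, the bin count, and the sample data are all hypothetical, and the 0.25 alert level is a widely used rule of thumb rather than anything NIST prescribes.

```python
import math


def psi(baseline: list, live: list, bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: list) -> list:
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            index = min(max(index, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[index] += 1
        # Floor empty bins at a tiny value so the logarithm stays defined.
        return [max(count / len(values), 1e-6) for count in counts]

    p = proportions(baseline)
    q = proportions(live)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Deterministic toy data: the live sample is the baseline shifted upward.
baseline_sample = [i / 100 for i in range(100)]
shifted_sample = [value + 0.5 for value in baseline_sample]

print(psi(baseline_sample, baseline_sample))
print(psi(baseline_sample, shifted_sample))
```

Computing a score like this on a schedule for each monitored feature, and alerting when it crosses a threshold, is a simple form of the continuous monitoring this section describes.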

In practice, AI often functions as a "black box", making it harder to detect when something goes wrong. Some 45% of organizations cite concerns about data accuracy or bias as a major obstacle to adopting AI. Yet, many still lack the tools for continuous monitoring, leaving them vulnerable to data drift. Unlike manual checks, continuous monitoring enables real-time analysis, catching issues before they escalate.

"AI systems... may be trained on data that can change over time, sometimes significantly and unexpectedly, affecting system functionality and trustworthiness in ways that are hard to understand." - NIST AI RMF 1.0

To address data drift, advanced AI-SPM tools can automatically detect deployed AI models, identify "Shadow AI", and map both training and inference datasets, including document stores and vector databases. These tools also flag misconfigurations, such as publicly accessible training data, which could pose security risks.

For example, Net Solutions recently worked with a North American commercial cleaning company to implement AI governance for a sales chat assistant. By using real-time monitoring tools like AWS CloudWatch and DataDog, along with automated pipelines integrated with Zoho Desk alerts, the company transitioned from a proof-of-concept to a compliant, scalable AI solution in just six weeks.

Technologist Akash Lomas from Net Solutions emphasizes the importance of monitoring:

"Measurement becomes especially critical in environments where AI directly shapes customer experiences... without continuous oversight, these systems can drift - serving irrelevant, biased, or misleading content".

Ignoring data drift can lead to major consequences: reduced system performance, regulatory fines, and loss of trust. On top of that, the average data breach costs businesses $4.45 million.

8. Exposed Sensitive Training Data

The risk of exposing sensitive training data is a serious concern, especially when it comes to compliance violations. According to NIST, Generative AI introduces 12 distinct risks, with data privacy issues - like leakage and unauthorized disclosure of personal information - being a major focus. Many organizations face challenges in keeping track of where their AI training data resides and ensuring its security.

To tackle this, AI-Security Posture Management (AI-SPM) tools have become essential. These tools can automatically identify deployed AI models and locate the datasets used for training across cloud platforms, including document storage and vector databases. They also flag vulnerabilities, such as publicly accessible training data or open application endpoints. Mahesh Nawale, Product Marketing Manager at Zscaler, emphasizes the importance of these tools:

"Zscaler AI-SPM automatically locates datasets used in AI training and inference across your cloud environment - including data, document stores and vector databases. It flags misconfigurations like publicly accessible training data, giving your security teams the insight needed to investigate, remediate, and prove compliance".

AI tools go beyond just identifying misconfigurations. They also monitor model behavior to catch potential threats. For instance, they can detect issues like data memorization, model inversion, or suspicious activities such as large data downloads or unauthorized changes, which might signal data exfiltration. Automated scanning capabilities further strengthen security by identifying sensitive information - like biometric, health, or location data - that hasn't been properly anonymized.
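The two simplest checks mentioned here, public exposure and unanonymized sensitive categories, can be sketched as rules over a dataset inventory. Everything in this example is hypothetical: the `Dataset` record, the category labels, and the dataset names stand in for whatever metadata a real AI-SPM tool would discover in your cloud environment.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Sensitive categories named in this section; labels are illustrative.
SENSITIVE_CATEGORIES = {"biometric", "health", "location"}


@dataclass
class Dataset:
    """Metadata for one training or inference dataset (hypothetical shape)."""
    name: str
    public: bool
    categories: set[str] = field(default_factory=set)
    anonymized: bool = False


def exposure_findings(datasets: list[Dataset]) -> list[tuple[str, str]]:
    """Return (dataset name, finding) pairs for each rule a dataset violates."""
    findings = []
    for dataset in datasets:
        if dataset.public:
            findings.append((dataset.name, "publicly accessible"))
        sensitive = sorted(dataset.categories & SENSITIVE_CATEGORIES)
        if sensitive and not dataset.anonymized:
            findings.append((dataset.name, "unanonymized sensitive data: " + ", ".join(sensitive)))
    return findings


inventory = [
    Dataset("support-chat-logs", public=True, categories={"location"}),
    Dataset("clinical-notes-v2", public=False, categories={"health"}, anonymized=True),
    Dataset("product-catalog", public=False),
]

for name, finding in exposure_findings(inventory):
    print(f"{name}: {finding}")
```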

NIST has also developed "Dioptra", a software solution that helps organizations test AI models against cyberattacks. It measures how attacks impact model performance and identifies scenarios where training data could be at risk.

Katerina Megas, Program Manager at NIST, highlights the growing challenge:

"AI creates new re-identification risks, not only because of its analytic power across disparate datasets, but also because of potential data leakage from model training".

With the enormous datasets used in training Large Language Models, continuous automated testing is no longer optional - it's a necessity to protect sensitive information.

9. Missing Bias Mitigation Techniques

When AI systems lack proper methods to address bias, they risk amplifying unfair outcomes and creating significant compliance issues. According to NIST, managing harmful bias is one of the seven critical traits of a trustworthy AI system. Traditional risk management frameworks fall short in handling AI bias, making specialized mitigation strategies essential. While earlier sections covered detecting bias, tackling it effectively requires clear controls and ongoing retraining efforts. Simply identifying bias isn’t enough - long-term strategies are needed to counteract it.

AI tools play a key role in spotting gaps in bias controls. They flag issues like missing fairness metrics (e.g., disparate impact ratios or false positive rate differences), systems that lack adversarial testing or AI red teaming, and models without scheduled retraining, which leaves them vulnerable to data drift.
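The two fairness metrics named above are straightforward to compute once predictions are split by group. The sketch below is illustrative only: the function names and toy labels are made up, the 0.8 cutoff follows the familiar four-fifths rule of thumb, and the 0.1 tolerance on false positive rate difference is an arbitrary assumption. It also assumes each group contains at least one true negative and that the reference group has a non-zero selection rate.

```python
from __future__ import annotations


def selection_rate(y_pred: list[int]) -> float:
    """Share of cases that received the positive outcome."""
    return sum(y_pred) / len(y_pred)


def false_positive_rate(y_true: list[int], y_pred: list[int]) -> float:
    """Share of true negatives that were predicted positive."""
    preds_on_negatives = [pred for truth, pred in zip(y_true, y_pred) if truth == 0]
    return sum(preds_on_negatives) / len(preds_on_negatives)


def fairness_gaps(group: tuple[list[int], list[int]],
                  reference: tuple[list[int], list[int]]) -> dict[str, float | bool]:
    """Compare one group against a reference group; each is (y_true, y_pred)."""
    disparate_impact = selection_rate(group[1]) / selection_rate(reference[1])
    fpr_difference = false_positive_rate(*group) - false_positive_rate(*reference)
    return {
        "disparate_impact": disparate_impact,
        "fpr_difference": fpr_difference,
        # Assumed thresholds: four-fifths rule and a 0.1 tolerance on FPR difference.
        "flagged": disparate_impact < 0.8 or abs(fpr_difference) > 0.1,
    }


# Toy labels and predictions for two groups.
reference_group = ([1, 0, 1, 0], [1, 1, 1, 0])
protected_group = ([1, 0, 1, 0], [1, 0, 0, 0])

report = fairness_gaps(protected_group, reference_group)
print(report)
```

A system with no such report on file for any group comparison is exactly the "missing fairness metrics" gap that automated scans are meant to surface.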

"Without proper controls, AI systems can amplify, perpetuate, or exacerbate inequitable or undesirable outcomes for individuals and communities." – NIST AI RMF 1.0

To address these challenges, AI governance platforms conduct automated scans to uncover compliance gaps based on the NIST AI RMF guidelines. They ensure organizations have implemented necessary safeguards, such as content filters, bias correction protocols, and other guardrails mandated by standards like NIST AI 600-1. These platforms also trace data lineage to confirm that training datasets are free from issues that could lead to biased results. Additionally, AI-Security Posture Management (AI-SPM) tools identify all deployed AI models, including "shadow AI" systems that may have bypassed fairness testing altogether.

With AI systems making billions of decisions, undetected biases can erode public trust, infringe on civil liberties, and trigger regulatory penalties. Notably, mentions of AI in legislation worldwide have risen 21.3% since 2023. These risks highlight the urgent need for continuous, automated oversight to ensure fairness and accountability.

10. Inadequate Vendor AI Classification

When organizations don't properly categorize third-party AI systems, they leave themselves open to compliance risks they might not even see coming. The problem? Vendor risk metrics often don’t align with a company’s internal risk frameworks. If vendors are misclassified, it’s impossible to apply the right controls or determine whether their "low-risk" label actually fits your organization’s risk tolerance. This mismatch highlights the need for automated tools that can verify vendor classifications in real time.

AI-powered tools step in here, automating the process of identifying classification gaps. By scanning vendor contracts and technical specs, these tools pinpoint misclassified systems and flag unassessed third-party AI components, as outlined in NIST AI RMF functions Govern 6.1 and Map 4.1. They also catch systems that lack transparency about critical elements like pre-trained models, data pipelines, or development processes.

"Risk can emerge both from third-party data, software or hardware itself and how it is used." – NIST AI RMF 1.0

The NIST Cybersecurity Framework Profile for AI, developed with input from over 6,500 contributors, stresses the importance of supply chain visibility. AI-driven tools help by maintaining real-time inventories of third-party systems, mapping the AI supply chain to uncover unclassified or unvetted components. These tools expose vulnerabilities hiding in software, hardware, or data sources that might only become apparent during deployment. With automated vendor classification, organizations can gain a comprehensive view of their AI risk landscape, in line with NIST’s guidelines.

Another benefit of automation is its ability to prioritize vendor assessments based on data sensitivity. For example, systems that handle personally identifiable information or interact directly with users are flagged for closer scrutiny. This approach acknowledges that a system deemed low-risk in one context - like processing physical sensor data - might pose a high risk in another, such as managing protected health information. Without this automated oversight, companies risk wasting resources on low-risk systems while leaving high-stakes AI tools under-monitored.
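A context-aware triage like this can be sketched as a simple scoring function. The weights, the threshold, the `VendorSystem` record, and the system names below are all hypothetical. The point is only that the score comes from how your organization uses the system, not from the vendor's own label.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical sensitivity weights for the data a system handles.
DATA_SENSITIVITY = {"sensor": 1, "internal": 2, "pii": 4, "phi": 5}


@dataclass
class VendorSystem:
    name: str
    data_type: str      # what the system processes in *your* deployment
    user_facing: bool   # whether it interacts directly with users
    vendor_label: str   # the vendor's own risk classification


def review_score(system: VendorSystem) -> int:
    """Score a system by deployment context; unknown data types get a middling 3."""
    score = DATA_SENSITIVITY.get(system.data_type, 3)
    if system.user_facing:
        score += 2
    return score


def triage(systems: list[VendorSystem], review_threshold: int = 5) -> list[tuple[str, int, bool]]:
    """Rank systems by score and mark those whose 'low' vendor label looks mismatched."""
    rows = []
    for system in sorted(systems, key=review_score, reverse=True):
        score = review_score(system)
        mismatch = system.vendor_label == "low" and score >= review_threshold
        rows.append((system.name, score, mismatch))
    return rows


systems = [
    VendorSystem("hvac-anomaly-model", "sensor", False, "low"),
    VendorSystem("patient-intake-chatbot", "phi", True, "low"),
    VendorSystem("hr-resume-screener", "pii", False, "medium"),
]

for name, score, mismatch in triage(systems):
    print(name, score, "label mismatch" if mismatch else "ok")
```

Here two systems carry the same vendor label of "low", yet only the one handling protected health information is pushed to the top of the review queue.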

How ISMS Copilot Identifies These Gaps

ISMS Copilot takes a framework-specific approach to pinpoint gaps in NIST 800-53 compliance. Instead of scouring the internet for answers, it uses Retrieval-Augmented Generation (RAG) to pull targeted information from a carefully curated dataset. This dataset includes over 30 frameworks like NIST 800-53, ISO 27001, SOC 2, and GDPR, providing precise and actionable guidance to address compliance gaps.

The tool simplifies gap analysis by mapping controls across multiple standards, creating auditor-ready documentation, and breaking down lengthy reports. With ISMS Copilot One, users can upload compliance documents - even those exceeding 20 pages - for automated analysis. Currently, more than 600 consultants rely on this tool for their information security compliance needs. Here's a quick comparison of ISMS Copilot's specialized features versus general-purpose AI tools:

| Feature | ISMS Copilot | General-Purpose AI |
|---|---|---|
| Dataset | Focused on information security compliance | Broad, general knowledge base |
| Accuracy | Delivers framework-specific guidance | Often vague or overly generic |
| Audit Readiness | Creates auditor-ready documentation | Not tailored for formal audits |
| Data Privacy | SOC 2 Type II-compliant; no user data training | May use user inputs for training |
| Document Analysis | Handles detailed gap analysis for large reports | Limited to basic text processing |

To safeguard client information, ISMS Copilot provides dedicated Workspaces that keep projects isolated and secure. All data is stored in SOC 2 Type II-compliant datastores with end-to-end encryption, ensuring that uploaded documents or conversations are never used to train its AI models.

For organizations tackling NIST 800-53 gap analyses, ISMS Copilot offers clear, actionable steps to implement controls and meet FISMA requirements. You can explore the free tier at chat.ismscopilot.com, with paid plans starting at $20/month.

Conclusion

AI has shifted NIST compliance from being a periodic, reactive process to a proactive, real-time system. By analyzing entire datasets, AI can identify potential issues before they grow into larger problems. With AI-driven automation, organizations can stay audit-ready by automating evidence collection while keeping up with changing compliance requirements.

Tools like policy-as-code and continuous assurance make it possible to automate NIST controls, addressing issues early in the development process. AI-powered tools can quickly spot anomalies across various environments, ensuring centralized oversight across multiple compliance frameworks. These capabilities are at the core of the solutions offered by ISMS Copilot.

ISMS Copilot provides continuous compliance through its Retrieval-Augmented Generation (RAG) approach, leveraging a curated dataset that spans frameworks like NIST 800-53, ISO 27001, SOC 2, and beyond. Its features include automated gap analysis, cross-framework mapping, and audit-ready documentation, helping organizations remain aligned with evolving NIST standards.

On top of these benefits, ISMS Copilot supports multiple frameworks, a critical need for today’s complex security programs. Organizations can explore these AI-powered compliance tools for free by visiting chat.ismscopilot.com.

FAQs

How does AI simplify asset inventory management for NIST compliance?

Maintaining a precise and up-to-date asset inventory is a critical component of the NIST Cybersecurity Framework (e.g., ID.AM-01 in CSF 2.0) and NIST 800-53 controls. AI simplifies this task by automating the discovery, classification, and tracking of both hardware and software assets. Instead of relying on traditional, often error-prone manual methods, AI leverages techniques like machine learning to analyze network traffic, endpoint activity, and cloud logs. This approach helps detect unidentified or unauthorized assets, highlight discrepancies, and keep the inventory continuously updated.

AI-driven tools such as ISMS Copilot elevate this process by integrating with scanners, CMDBs, and cloud APIs. They can auto-populate NIST-compliant inventory tables and generate essential documentation, including asset reports and change logs. With its natural-language interface, ISMS Copilot makes it easy for users to ask questions like, "Which devices are missing firmware updates?" and receive immediate, actionable insights. This not only reduces manual workload but also improves accuracy, keeping your asset inventory aligned with NIST requirements.

How does AI help identify and reduce bias in AI systems?

AI plays an important role in spotting and fixing bias within artificial intelligence systems. The NIST AI Risk Management Framework highlights fairness as one of the key principles of trustworthy AI, alongside safety, transparency, and privacy. Using AI tools, organizations can sift through large datasets, predictions, and metadata to uncover patterns of unequal treatment - like higher error rates that disproportionately affect certain demographic groups. These tools also apply statistical fairness metrics and offer actionable insights to address the identified issues.

AI-driven assistants, such as ISMS Copilot, take this a step further by actively monitoring AI systems for compliance with NIST controls. They can pinpoint missing documentation, flag untested data sources, or identify gaps in bias testing processes. By offering tailored templates and step-by-step guidance, these tools transform compliance from a static checklist into an interactive workflow. This makes it easier for organizations to address bias, ensuring their AI systems are not only compliant but also fairer and more reliable.

How can AI help automate privacy control assessments under NIST standards?

AI takes the hassle out of privacy control assessments by analyzing the NIST Privacy Framework and SP 800-53 privacy-related controls. It then aligns these controls with your organization’s data flows, systems, and policies. By scanning configuration files, API logs, and documentation, it builds a real-time map linking controls to assets, pinpointing issues like missing encryption or outdated access controls automatically.

ISMS Copilot, often referred to as “the ChatGPT of ISO 27001,” brings this functionality to NIST standards. For example, you can ask it to evaluate a specific control, such as NIST SP 800-53 control AU-3 (Content of Audit Records), whose enhancement AU-3(3) limits personally identifiable information in audit records. In response, it generates tailored checklists, steps for gathering evidence, and remediation plans. This reduces tedious manual work and offers fast, actionable insights to streamline compliance efforts.