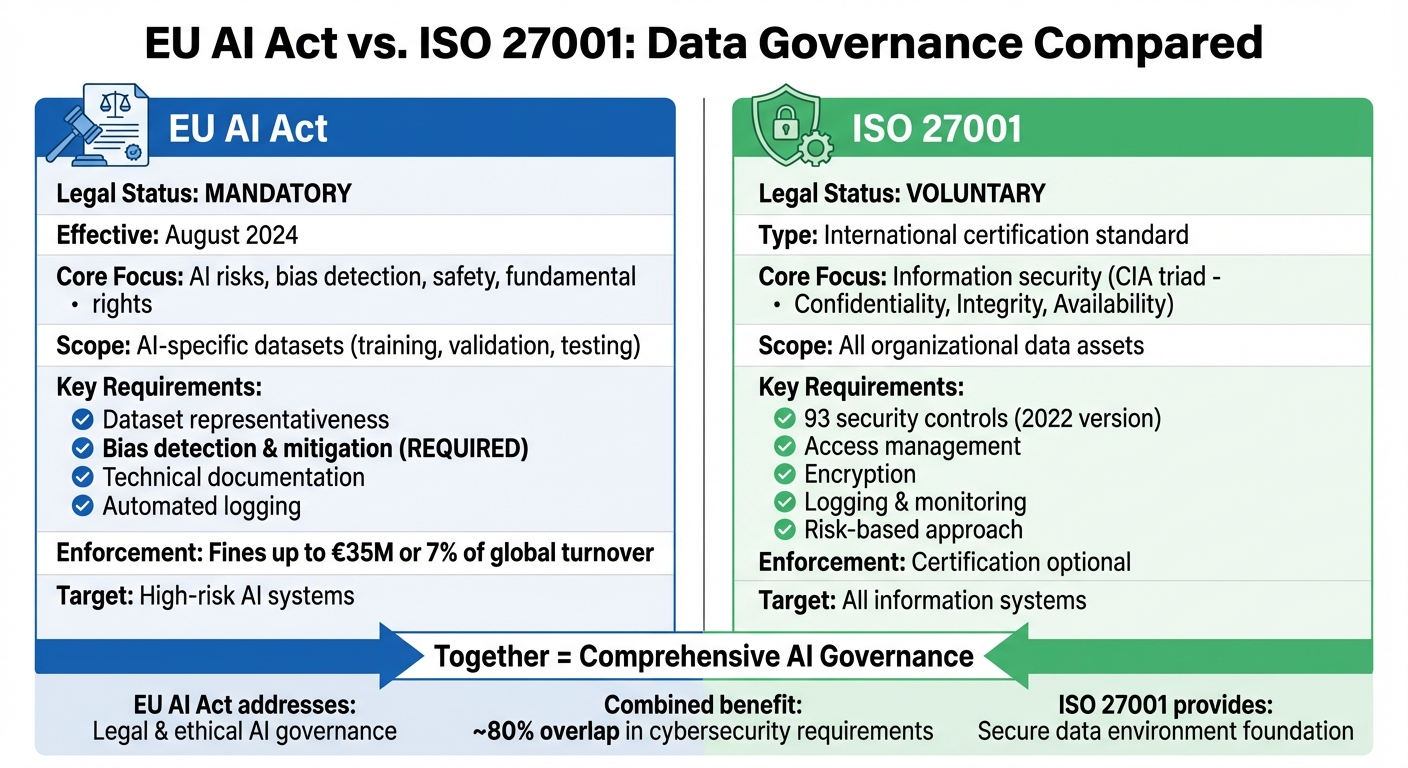

The EU AI Act and ISO 27001 approach data governance differently, but they can work together to manage AI systems effectively.

- EU AI Act: A mandatory regulation (in force since August 2024) focused on AI system risks, bias detection, and data quality. It imposes obligations such as dataset representativeness and bias mitigation, especially for high-risk AI systems.

- ISO 27001: A voluntary international standard for securing data through an Information Security Management System (ISMS). It emphasizes the confidentiality, integrity, and availability of all data assets, not just AI-specific data.

Key difference:

The EU AI Act addresses the legal and ethical governance of AI, while ISO 27001 focuses on the security of data environments. Together, they provide a structured approach to compliance and security for AI systems.

Quick comparison:

| Feature | EU AI Act | ISO 27001 |
|---|---|---|
| Legal status | Mandatory | Voluntary |
| Focus | AI risks, bias, and safety | Data security (CIA) |
| Scope | AI-specific datasets | All organizational data |
| Bias mitigation | Mandatory | Not addressed |
| Enforcement | Fines of up to €35 million or 7% of global annual turnover | Optional certification |

EU AI Act vs. ISO 27001: key differences in data governance

EU AI Act: data governance for high-risk AI systems

The EU AI Act takes a tailored approach to regulating AI systems, with stricter rules for high-risk applications. Article 10, which applies from August 2, 2026, sets out the specific obligations for these systems, and failing to meet them carries penalties.

Requirements for high-risk AI systems

Under Article 10, the datasets used for training, validation, and testing must meet strict standards. They must be relevant, representative, as free of errors as possible, and complete for their intended purpose. They must also accurately reflect the populations and environments in which the AI system will operate, taking all applicable contexts into account.
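A representativeness check can be sketched in a few lines. The example below is a minimal sketch, not a method prescribed by the Act: it compares the share of each group in a dataset against a reference share and reports deviations above a tolerance. The function name `representation_gaps`, the `region` attribute, the reference shares, and the 5-percentage-point tolerance are all hypothetical choices made for illustration.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.05):
    """Return groups whose dataset share deviates from the reference share.

    The result maps each flagged group to (observed share - reference share).
    The tolerance is an illustrative assumption, not a regulatory threshold.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps


# Hypothetical toy data: 8 of 10 records come from the "urban" group.
sample = [{"region": "urban"}] * 8 + [{"region": "rural"}] * 2
reference = {"urban": 0.6, "rural": 0.4}

region_gaps = representation_gaps(sample, "region", reference)
print(region_gaps)
```

In practice the reference shares would come from a documented source for the population the system is meant to serve, and that source would itself belong in the dataset documentation.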

Addressing bias is essential. Providers are expected to examine datasets for biases that could compromise health, safety, or fundamental rights, and to take measures to detect, prevent, and mitigate them. This matters most where AI outputs can influence future inputs, creating feedback loops that reinforce discriminatory patterns. When sensitive personal data is processed for this purpose, organizations should apply safeguards such as pseudonymization and delete the data once the bias has been corrected.

Documentation is another key aspect. Providers must track the lifecycle of their data in detail, from design choices and data sources to processes such as labeling, cleaning, and enrichment. They are required to identify data gaps, document assumptions, and confirm that the dataset is suitable for its intended use. For systems that do not involve model training, these rules apply only to the testing datasets.

Governance frameworks and enforcement

To ensure compliance with these data governance rules, the EU AI Act establishes formal enforcement mechanisms. Unlike voluntary guidelines, the Act imposes binding obligations on any organization that places high-risk AI systems on the EU market. Oversight takes place both at Union level, through bodies such as the AI Office and the European Artificial Intelligence Board, and at national level, through designated national competent authorities. These authorities can request technical documentation, evaluate systems, and impose corrective measures in cases of non-compliance.

Organizations are required to implement structured governance frameworks that cover every stage of their data pipelines. This includes standardized protocols for data preparation, regular bias audits, and post-market monitoring plans to assess system performance after deployment. Certain practices, such as untargeted scraping of facial images from the internet to build facial recognition databases, are expressly prohibited by the Act. With enforcement deadlines approaching, treating Article 10 as a mere checklist could have serious consequences.

ISO 27001: data security and governance controls

ISO 27001 plays a key role alongside the EU AI Act by focusing on data protection. It is built on the CIA triad: confidentiality (only authorized users can access data), integrity (data stays accurate and complete), and availability (data is accessible when needed). This technology-neutral framework applies universally, whether the goal is to protect customer records, financial data, or AI training datasets. By focusing on robust data security practices, ISO 27001 provides a solid foundation for managing AI-specific data workflows.

Core data governance requirements

The 2022 update of ISO 27001 organizes its 93 security controls into four main categories: organizational, people, physical, and technological. These controls cover critical areas such as access, encryption, monitoring, and third-party risk. Some highlights follow; note that the Annex references cited here and later in this article use the numbering of the 2013 edition, which the 2022 edition reorganized:

- Access management (Annex A.9): Ensures that only authorized individuals can view, modify, or delete data.

- Cryptography (Annex A.10): Protects confidential data through encryption, both at rest and in transit.

- Logging and monitoring (Annex A.12): Tracks access and actions through audit logs.

- Supplier security (Annex A.15): Mitigates the risks associated with data handling by external suppliers.

Unlike the EU AI Act, which sets specific rules for high-risk AI systems, ISO 27001 emphasizes a risk-based approach: organizations identify potential threats to their data and apply tailored controls. A 2024 Gartner survey found that companies using automated compliance platforms shortened their audit cycles by 39%. This shift toward "living compliance," as Mark Sharron of ISMS.online describes it, focuses on real-time evidence collection rather than static documentation. These controls not only improve data security but also make it easier to integrate into AI systems.

Applicability to AI data workflows

The ISO 27001 framework adapts naturally to AI systems. Its asset management controls (Annex A.8) require organizations to inventory their information assets, classify them by sensitivity, and define appropriate handling procedures. In AI environments, this means cataloging training datasets, validation sets, and model weights alongside traditional data assets.
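As an illustration, an inventory that treats AI artifacts as information assets could look like the sketch below. It is a minimal example under stated assumptions: the `AIAsset` fields, the three sensitivity levels, the asset names, and the rule that confidential assets need encryption are hypothetical and are not taken from the standard.

```python
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    """Hypothetical three-level classification scheme."""
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3


@dataclass(frozen=True)
class AIAsset:
    name: str
    kind: str  # e.g. "training_set", "validation_set", "model_weights"
    owner: str
    sensitivity: Sensitivity


def assets_requiring_encryption(inventory, threshold=Sensitivity.CONFIDENTIAL):
    """List assets at or above the threshold (an assumed handling rule)."""
    return [a.name for a in inventory if a.sensitivity.value >= threshold.value]


# Hypothetical inventory entries.
inventory = [
    AIAsset("loan-train-v3", "training_set", "data-eng", Sensitivity.CONFIDENTIAL),
    AIAsset("loan-val-v3", "validation_set", "data-eng", Sensitivity.CONFIDENTIAL),
    AIAsset("loan-model-weights", "model_weights", "ml-team", Sensitivity.INTERNAL),
]

flagged = assets_requiring_encryption(inventory)
print(flagged)
```

Keeping datasets and model weights in the same register as other information assets lets existing classification and handling procedures apply to them without a separate process.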

Specific data preparation tasks, such as cleaning, labeling, and enrichment, fall under "Handling of assets" (A.8.2.3). Secure transfers of datasets between environments are governed by controls such as "Physical media transfer" (A.8.3.3) and by communications security measures. The "Operations security" (Annex A.12) and "System development" (Annex A.14) controls, in turn, cover secure data processing, effective change management, and the overall integrity of AI pipelines.

As Pansy of Sprinto explains:

"ISO 27001 protects the system, and ISO 42001 governs the decisions."

This distinction is crucial. While ISO 27001 focuses on protecting the data flow, regulatory frameworks such as the EU AI Act address broader questions, such as the fairness and explainability of AI outputs. Together, they form a complementary approach to managing AI systems effectively.

Comparing the EU AI Act and ISO 27001: data governance

This section looks more closely at how the EU AI Act and ISO 27001 address data governance across the AI lifecycle, highlighting their differences and similarities.

Feature comparison

The EU AI Act and ISO 27001 take different paths on data governance. The EU AI Act is a mandatory regulation: violations involving prohibited practices can lead to fines of up to €35,000,000 or 7% of global annual turnover. ISO 27001, by contrast, is a voluntary certification that organizations adopt to demonstrate their commitment to security.

The EU AI Act prioritizes safety, fundamental rights, and bias prevention, especially for high-risk AI systems. ISO 27001, however, focuses on protecting all information assets through the CIA triad: confidentiality, integrity, and availability. While the EU AI Act stresses the importance of representative, error-free training datasets, ISO 27001 is more concerned with the security of the data environment as a whole.

| Feature | EU AI Act (high-risk AI) | ISO 27001 |
|---|---|---|
| Legal status | Mandatory | Voluntary |
| Primary focus | Safety, fundamental rights, bias | Information security (CIA) |
| Data coverage | Training, validation, and testing sets | All information assets |
| Bias requirement | Mandatory detection and mitigation | Not explicitly addressed |
| Security controls | AI robustness and cybersecurity | 93 controls (2022 version) |
| Documentation | Technical documentation and conformity assessment | ISMS manual, Statement of Applicability |

The next step is to see how these frameworks align with the stages of the AI data lifecycle.

AI data lifecycle stages

Mapped against the AI data lifecycle, the differences between the EU AI Act and ISO 27001 become even clearer. For example, Article 10 of the EU AI Act requires clear transparency about the origin and purpose of data at the sourcing stage. ISO 27001 addresses this through asset inventory and supplier controls. However, ISO 27001 does not cover bias mitigation, so organizations have to build separate AI governance workflows for it.

| Lifecycle stage | EU AI Act obligations (Art. 10) | ISO 27001 controls (Annex A) |
|---|---|---|
| Sourcing | Data origin, original purpose of collection | Asset inventory, supplier relationships |
| Labeling/Preparation | Annotation, labeling, cleaning, aggregation | Information classification, data masking |
| Training/Validation | Assessment of representativeness, identification of data gaps | Secure development environment, change management |
| Bias mitigation | Detection and correction of prohibited discrimination | Not applicable (requires separate AI governance) |
| Retention | Deletion of special-category data after bias correction | Retention and disposal of information assets |

Overlap and differences

Although the two frameworks share some common ground, they serve different purposes. Both require logging and access controls, for example, but for different reasons. The EU AI Act requires "automatically generated logs" to trace AI decisions back to their data sources (Article 12), while ISO 27001 uses logging for security monitoring and incident response. Organizations with well-established ISMS implementations may already meet up to 80% of the EU AI Act's cybersecurity requirements, which gives them a head start.
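A single log record can serve both purposes if it captures traceability fields alongside the usual security metadata. The following is a minimal sketch, not a format defined by either framework: the field names, the identifiers, and the 180-day default retention are hypothetical, and `build_decision_log` is an illustrative helper.

```python
import json
from datetime import datetime, timedelta, timezone


def build_decision_log(model_id, dataset_ids, decision, timestamp, retention_days=180):
    """Return a JSON log line linking one AI decision to the model's datasets.

    The field names and the retention default are illustrative assumptions.
    """
    record = {
        "timestamp": timestamp.isoformat(),
        "model_id": model_id,
        "dataset_ids": sorted(dataset_ids),
        "decision": decision,
        # Date after which the record may be deleted under the assumed policy.
        "delete_after": (timestamp + timedelta(days=retention_days)).date().isoformat(),
    }
    return json.dumps(record, sort_keys=True)


# Hypothetical identifiers for illustration only.
ts = datetime(2026, 8, 2, 9, 30, tzinfo=timezone.utc)
line = build_decision_log("credit-scoring-v2", ["val-2025Q4", "train-2025Q4"], "declined", ts)
print(line)
```

Emitting structured records like this lets the same log stream feed a security monitoring tool and a traceability review without maintaining two formats.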

The main difference comes down to data quality versus data security. The EU AI Act allows sensitive personal data, such as race, religion, and health, to be processed for bias detection. ISO 27001, by contrast, applies more general controls to protect sensitive data. As Gnanendra Reddy, an ISO/IEC 27001 Lead Auditor, puts it:

"The EU AI Act is the regulation, and ISO/IEC 42001 is the operating system that makes compliance repeatable and auditable."

Building an integrated data governance model

The EU AI Act describes the "what," while ISO 27001 provides the "how" by establishing a solid operational framework. Together, they offer a sound basis for defining requirements and simplifying implementation.

Mapping EU AI Act requirements to ISO 27001 controls

To establish a clear, traceable process, organizations can align the EU AI Act's requirements with ISO 27001 controls. For example, the Article 10 mandate to document the origin of data and the purpose of its collection (Article 10(2)(b)) pairs with ISO 27001's asset management controls (A.8), which already emphasize inventorying information assets. Likewise, the requirements for data preparation, labeling, and cleaning map to operations security (A.12), so that data processing and transformation are properly controlled.

| EU AI Act requirement (Art. 10) | Relevant ISO 27001 control domain | Operational action |
|---|---|---|
| Data collection and origin (2b) | Asset management (A.8) | Catalog data sources and document their purpose. |
| Data preparation/labeling (2c) | Operations security (A.12) | Use controlled methods for annotation and cleaning. |
| Bias detection and mitigation (2f, 2g) | Risk assessment (A.12.6 / A.14.2) | Run technical tests and document mitigation measures. |
| Technical documentation (Art. 11) | Documentation (A.5 / A.18) | Maintain version-controlled model cards and design records. |
| Logging and record-keeping (Art. 12) | Logging and monitoring (A.12.4) | Define risk-based log retention policies and access controls. |

Building a requirements-to-evidence matrix that links EU AI Act articles to ISO controls and their supporting evidence turns compliance into a structured, traceable process. For example, logging requirements typically involve retention periods of 180 to 365 days, a range that fits both frameworks.
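Such a matrix can be kept as plain structured data so that gaps are easy to query. The sketch below is one possible shape, not a prescribed format: the `MatrixRow` type, the evidence file names, and the `missing_evidence` helper are hypothetical, while the requirement-to-control pairings repeat the mapping table above.

```python
from dataclasses import dataclass, field


@dataclass
class MatrixRow:
    requirement: str   # EU AI Act article or paragraph
    iso_control: str   # ISO 27001 control domain it is mapped to
    evidence: list = field(default_factory=list)  # artifacts proving the control works


# Evidence file names are placeholders for illustration.
matrix = [
    MatrixRow("Art. 10(2)(b) data collection and origin", "A.8 Asset management",
              ["data-source-catalog.csv"]),
    MatrixRow("Art. 10(2)(c) data preparation and labeling", "A.12 Operations security",
              ["labeling-procedure.md"]),
    MatrixRow("Art. 10(2)(f)-(g) bias detection and mitigation", "A.12.6 / A.14.2"),
    MatrixRow("Art. 12 logging and record-keeping", "A.12.4 Logging and monitoring",
              ["log-retention-policy.md"]),
]


def missing_evidence(rows):
    """Return the requirements that have no evidence attached yet."""
    return [row.requirement for row in rows if not row.evidence]


open_items = missing_evidence(matrix)
print(open_items)
```

Running a check like this before an audit shows at a glance which obligations still lack supporting evidence.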

With these alignments in place, the focus shifts to embedding the controls in day-to-day workflows.

Implementing AI data governance

Combining these frameworks turns regulatory compliance from a simple checklist into a coherent operating system. By building on your existing ISMS and using the PDCA (Plan-Do-Check-Act) cycle, you can integrate AI-specific controls without starting from scratch. This could include:

- Extending supplier management processes to cover providers of AI training data.

- Implementing continuous monitoring to detect model drift.

- Scheduling regular reviews to identify and address bias.
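For the drift monitoring item, one common technique is the population stability index (PSI), which compares the binned distribution of a feature or score at training time with the distribution seen in production. The sketch below is illustrative only: neither framework prescribes PSI, the bin proportions are made up, and the 0.2 alert threshold is a widely used rule of thumb rather than a requirement.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as lists of proportions."""
    if len(expected) != len(actual):
        raise ValueError("both distributions need the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Clamp empty bins to a small value so the logarithm stays defined.
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical bin proportions: training-time baseline vs. current production data.
baseline = [0.25, 0.25, 0.25, 0.25]
current = [0.10, 0.20, 0.30, 0.40]

score = population_stability_index(baseline, current)
drifted = score > 0.2  # assumed alert threshold
print(round(score, 3), drifted)
```

A scheduled job could compute this per feature and open a review ticket when the threshold is crossed, which fits the Check step of the PDCA cycle.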

The "three lines of defense" model works well for AI governance. In this setup, operational teams manage risks during development (first line), legal and risk teams provide oversight (second line), and internal audit provides independent checks (third line). A centralized model inventory is essential for tracking metadata such as data types, algorithms, and deployment contexts. This matters all the more because a 2024 study of 624 AI use cases found that 30% of models had been developed by third parties and that some organizations could not identify the algorithms in use.

Maintaining a unified conformity file is another key step. This file maps each AI system requirement to the corresponding policies, evidence, and monitoring results, so that compliance documentation is centralized and readily available for regulatory reviews.

Using ISMS Copilot for integrated compliance

ISMS Copilot simplifies the integration process by acting as an AI-powered assistant that connects legal requirements to ISO controls. It automatically maps the data governance requirements of Article 10 of the EU AI Act to ISO 27001 controls, which removes the need for manual cross-referencing. The platform also helps draft critical documents, such as data lifecycle policies, risk registers, and conformity files, while linking each obligation to the corresponding evidence.

By automating evidence tracking and building on the PDCA cycle, ISMS Copilot turns audits into straightforward retrieval tasks. It also embeds AI data governance in repeatable business operations. Organizations can use the tool to define their roles under the EU AI Act (for example, as providers, deployers, or both), set up scoped logging, and automate bias detection documentation to comply with Article 10, so that special categories of personal data are processed and deleted appropriately.

With support for more than 30 frameworks, including ISO 27001, ISO 42001, and the EU AI Act, ISMS Copilot lets organizations run a unified compliance model instead of juggling fragmented systems. This is especially important given that, although 96% of companies already use AI, only 5% have formal AI governance frameworks in place.

Conclusion

The EU AI Act and ISO 27001 are not rivals; they work together. The AI Act sets out what organizations must do to ensure that AI systems are safe, transparent, and respectful of fundamental rights. ISO 27001, for its part, provides a structured Information Security Management System (ISMS) that helps put those requirements into practice effectively.

The growing adoption of these frameworks underscores the urgency of integrated governance. By combining the strengths of both, organizations can address traditional information security risks, such as data breaches and unauthorized access, together with AI-specific issues such as model bias and transparency in decision-making.

Integration is not just about ticking compliance boxes; it is a strategic move. Mapping the EU AI Act's requirements to ISO 27001 controls and embedding them in existing Plan-Do-Check-Act cycles creates a unified system. This approach not only simplifies audits but also positions companies to meet future regulations, as global standardization efforts increasingly align EU requirements with ISO/IEC frameworks.

Key takeaways

A unified compliance strategy offers tangible benefits. Here is how to get started:

Build on your existing ISMS.

Extend your ISO 27001 controls to address AI-specific challenges such as bias detection and dataset quality. With an estimated 80% overlap between ISO 27001 and other frameworks such as SOC 2, these systems complement one another naturally.

Centralize your AI inventory.

Maintain detailed documentation for each AI system, including data types, algorithms, deployment contexts, and whether you act as a provider or a deployer.

Automate framework alignment.

Tools such as ISMS Copilot can streamline compliance by automatically mapping the requirements of Article 10 of the EU AI Act to ISO 27001 controls. These tools also draft conformity files and track evidence across multiple frameworks, saving time and reducing errors as the AI Act's obligations take effect.

Retire outdated governance methods.

Paper-based policies that are not continuously reviewed or measured often fail audits. Instead, build AI governance into daily operations through continuous monitoring, regular bias reviews, and scoped logging (typically with 180 to 365 days of retention) that fits both frameworks.

Organizations that succeed under the EU AI Act will go beyond basic compliance. They will integrate legal requirements with operational best practices, using ISO 27001 as the foundation for scalable, auditable AI governance. With the right tools and mindset, compliance shifts from a regulatory hurdle to a competitive advantage.

Frequently asked questions

How do the EU AI Act and ISO 27001 work together to manage AI systems effectively?

The EU AI Act establishes a legal framework for AI, emphasizing risk-based classification, transparency, accountability, and data governance requirements (such as those set out in Article 10). These measures are intended to keep AI systems auditable and trustworthy. ISO 27001, for its part, offers a structured approach through an Information Security Management System (ISMS) to safeguard the confidentiality, integrity, and availability of data through risk assessments, controls, and continual improvement.

By integrating an ISMS aligned with ISO 27001, organizations can map key processes, such as risk management and access controls, directly to the requirements of the EU AI Act. This alignment helps meet the Act's expectations for data handling, oversight, and record-keeping. In essence, the AI Act specifies what has to be done, while ISO 27001 offers guidance on how to do it. Tools such as ISMS Copilot can streamline this process by connecting ISO 27001 controls to specific clauses of the AI Act and by providing policies, templates, and audit evidence to address both efficiently.

What is the difference between mandatory and voluntary compliance in data governance?

Mandatory compliance, as with the EU AI Act, requires organizations to follow strict, legally binding rules on data governance. This means they must establish risk management procedures, ensure data quality, and keep thorough records. Failing to meet these requirements can lead to substantial fines or even loss of access to certain markets.

Voluntary compliance, as with ISO 27001, takes a different approach. Although not required by law, it involves adopting best practices through an Information Security Management System (ISMS). Organizations often choose this route to enhance their reputation, strengthen security measures, and obtain certification. However, there are no legal consequences for not complying.

The main differences come down to legal enforcement versus optional participation, regulatory penalties versus reputational benefits, and strict mandates versus adaptable, tailored frameworks.

How can organizations incorporate AI-specific requirements into their ISO 27001 frameworks?

To incorporate AI-specific needs into an ISO 27001 framework, start by extending your risk assessment process to cover AI-related challenges such as model drift, data bias, and unauthorized access to models. Map these risks to the applicable ISO 27001 controls, such as change management, privileged access management, and supplier relationships. This approach ensures that AI risks are handled within the existing structure of your Information Security Management System (ISMS).

In addition, align your policies with the EU AI Act, focusing on its data governance requirements. Integrate practices such as data quality checks, provenance tracking, and retention limits into your ISMS procedures. These updates can be formalized as "AI data governance" policies that complement your current controls for data classification and handling.

For a more organized strategy, consider layering ISO/IEC 42001 (the AI management system standard) on top of ISO 27001. This creates a cohesive framework for managing both AI and information security. Tools such as ISMS Copilot can make this easier by automating risk identification, providing templates, and streamlining documentation, which helps you meet both AI and information security standards more effectively.