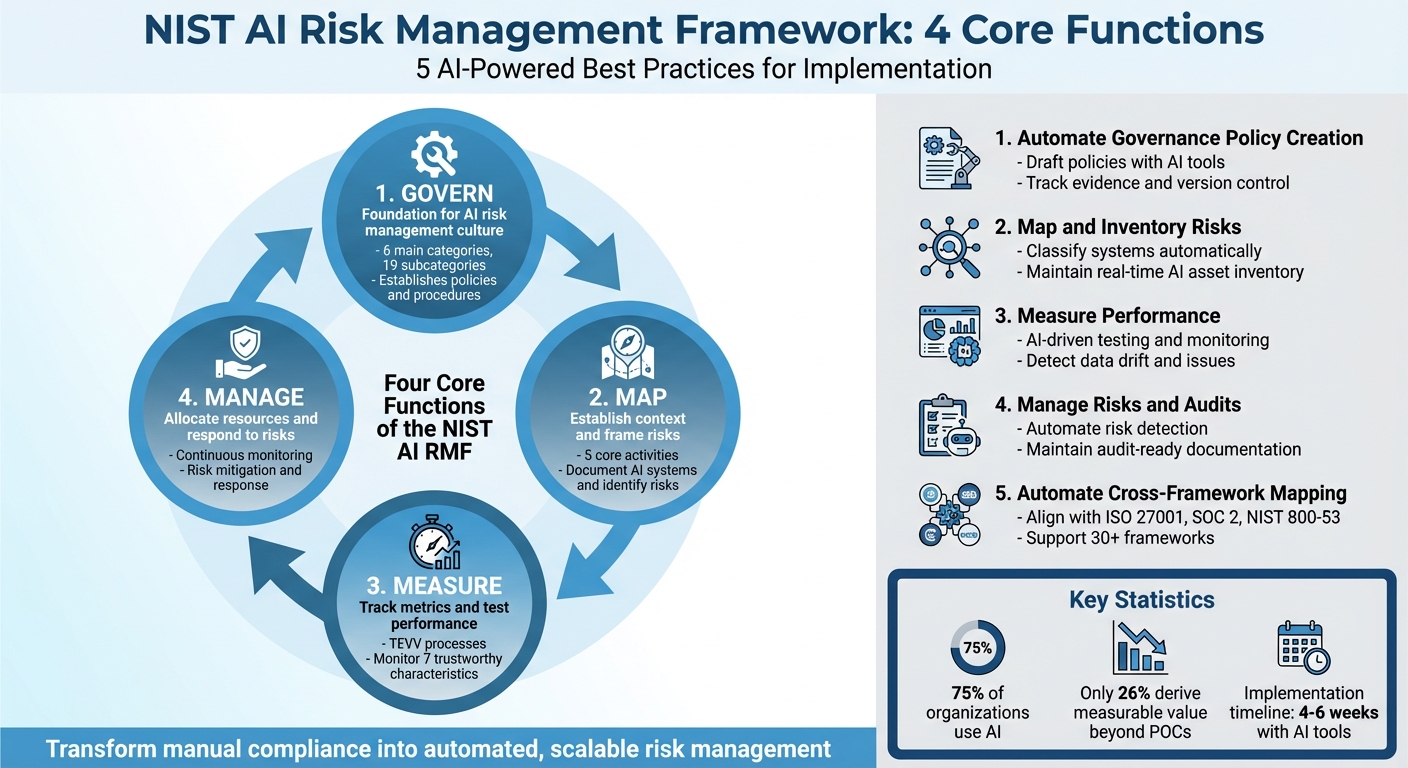

The NIST AI Risk Management Framework (AI RMF 1.0), released in January 2023, provides a structured way to address the risks specific to AI systems, such as data drift, model opacity, and bias. It rests on four core functions (Govern, Map, Measure, and Manage) that help organizations identify, monitor, and mitigate AI risks effectively.

Here are five AI-driven practices that make the NIST framework easier to implement:

- Automate governance policy creation: use AI tools to draft policies, track evidence, and manage version control for compliance.

- Map and inventory risks: use AI to classify systems, document risks, and keep an up-to-date inventory of AI assets.

- Measure performance: implement AI-driven testing and monitoring to track metrics, detect issues such as data drift, and keep systems reliable over time.

- Manage risks and audits: automate risk detection, prioritize responses, and keep audit-ready documentation.

- Automate cross-framework mapping: use AI tools to align the NIST AI RMF with other standards such as ISO 27001 and SOC 2 to speed up compliance.

These approaches reduce manual effort, improve accuracy, and support continuous compliance, turning AI risk management into a streamlined process.

NIST AI Risk Management Framework: 4 core functions and the implementation process

1. Automate governance policy generation with AI

Alignment with the NIST core functions

The Govern function is central to the NIST AI Risk Management Framework (AI RMF) and underpins the Map, Measure, and Manage functions. It comprises six categories and 19 subcategories, all aimed at fostering a risk management culture within organizations. AI-based tools can simplify drafting key governance documents, covering tasks such as understanding and documenting legal and regulatory requirements (GOVERN 1.1), establishing transparent risk management policies (GOVERN 1.4), and maintaining an accurate inventory of AI systems (GOVERN 1.6).

"Governance is designed to be a cross-cutting function to inform and be infused throughout the other three functions." – NIST AI RMF 1.0

Because governance underpins everything else, it is a natural place for AI tools to streamline work.

Using AI for automation and efficiency

AI tools bring scale and efficiency to governance processes that used to be manual and time-consuming. The NIST AI RMF Playbook, available in CSV, JSON, and Excel formats, organizes suggested actions by subcategory, which gives AI-assisted policy drafting a detailed, structured reference. For example, tools such as ISMS Copilot can use this structured data to draft governance policies that align with NIST and trace back to specific subcategories.
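As a minimal sketch of how structured Playbook data can seed a policy draft, the Python below groups suggested actions by subcategory into a plain-text outline for one function. It assumes the rows were already loaded from a Playbook export; the field names (`function`, `subcategory`, `suggested_actions`), the helper names, and the action wording are hypothetical placeholders, not the Playbook's actual schema or text.

```python
from collections import defaultdict

# Hypothetical rows shaped like a Playbook export. Field names are assumptions,
# and the action text is illustrative placeholder wording, not quoted from NIST.
PLAYBOOK_ROWS = [
    {"function": "GOVERN", "subcategory": "GOVERN 6.1",
     "suggested_actions": ["Review third-party software and data policies for IP and supply chain risk."]},
    {"function": "GOVERN", "subcategory": "GOVERN 1.1",
     "suggested_actions": ["List the laws and regulations that apply to each AI use case."]},
    {"function": "GOVERN", "subcategory": "GOVERN 1.6",
     "suggested_actions": ["Keep an inventory of AI systems with a named owner for each."]},
    {"function": "MAP", "subcategory": "MAP 1.1",
     "suggested_actions": ["Document the intended purpose and context of use."]},
]


def subcategory_key(subcategory: str) -> tuple:
    """Sort 'GOVERN 1.10' after 'GOVERN 1.9' by comparing the numeric parts."""
    _, number = subcategory.split(" ", 1)
    return tuple(int(part) for part in number.split("."))


def build_policy_outline(rows: list[dict], function: str) -> str:
    """Group suggested actions by subcategory into a plain-text draft outline."""
    sections: dict[str, list[str]] = defaultdict(list)
    for row in rows:
        if row["function"] == function:
            sections[row["subcategory"]].extend(row["suggested_actions"])

    lines = [f"{function} policy draft (requires human review)"]
    for subcategory in sorted(sections, key=subcategory_key):
        lines.append("")
        lines.append(subcategory)
        lines.extend(f"  - {action}" for action in sections[subcategory])
    return "\n".join(lines)


print(build_policy_outline(PLAYBOOK_ROWS, "GOVERN"))
```

A drafting tool (or a person) would then expand each bullet into policy language; keeping the subcategory ID next to each section keeps the draft traceable to the framework.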

Some organizations report having already integrated AI into their governance processes successfully. By mapping NIST AI RMF controls and automating evidence tracking, they were able to deploy compliant AI solutions suited to their business within a few weeks. This streamlined approach reduces complexity and eases compliance.

Practical implementation for compliance

To capture these efficiency gains, organizations can use AI to automate the creation of NIST-aligned governance policies. Start by feeding the suggested actions from the NIST AI RMF Playbook into AI drafting tools to generate detailed policies. For tasks such as assessing third-party risk (GOVERN 6.1), AI can automatically analyze vendors' software and data policies, addressing issues such as intellectual property and supply chain risk.

Automated version control can also help meet documentation requirements for test, evaluation, verification, and validation (TEVV). AI helps keep all governance documents consistent while tailoring them to your organization's risk profile. This approach moves AI projects from uncertain experiments to reliable, scalable, and compliant business solutions, building trust and transparency at every stage of the development lifecycle.
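Automated version control for governance documents can be as simple as hashing each revision and recording a new version only when the content actually changes. The sketch below assumes an in-memory store; `PolicyStore` and `PolicyVersion` are hypothetical names, and a real deployment would more likely sit on Git or a document management system.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class PolicyVersion:
    version: int
    sha256: str
    text: str
    created_at: str  # ISO 8601 timestamp in UTC


@dataclass
class PolicyStore:
    """In-memory revision history for governance documents (hypothetical helper)."""
    history: dict[str, list[PolicyVersion]] = field(default_factory=dict)

    def save(self, doc_id: str, text: str) -> PolicyVersion:
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        versions = self.history.setdefault(doc_id, [])
        if versions and versions[-1].sha256 == digest:
            return versions[-1]  # content unchanged: keep the current version
        new = PolicyVersion(len(versions) + 1, digest, text,
                            datetime.now(timezone.utc).isoformat())
        versions.append(new)
        return new

    def latest(self, doc_id: str) -> PolicyVersion:
        return self.history[doc_id][-1]


store = PolicyStore()
store.save("ai-risk-policy", "Draft 1")
store.save("ai-risk-policy", "Draft 1")   # identical text, no new version
latest = store.save("ai-risk-policy", "Draft 2")
print(latest.version)  # 2
```

Storing the hash with each version also gives auditors a cheap way to confirm that the document they are reading is the one that was approved.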

2. Map and inventory risks with AI

Alignment with the NIST core functions

The Map function is essential to understanding AI risks because it lays the groundwork for Measure and Manage. Without this context, managing risks effectively becomes difficult. Map is organized into five categories where AI can add efficiency: establishing the context of AI systems (MAP 1), categorizing systems, including by the tasks they perform (MAP 2), understanding their capabilities, goals, and expected benefits and costs (MAP 3), mapping risks and benefits for all components, including third-party software and data (MAP 4), and characterizing potential impacts (MAP 5).

"The MAP function establishes the context to frame risks related to an AI system." – NIST AI RMF 1.0

This function is closely tied to GOVERN 1.6, which calls for mechanisms to inventory AI systems, resourced according to organizational risk priorities; automated tools are a practical way to implement those mechanisms. Map also accounts for the socio-technical nature of AI. Together, these efforts make risk identification and mapping more efficient and continuous.

Using AI for automation and efficiency

AI can simplify and improve risk categorization and identification. For example, it can classify systems by the task they perform (such as recommenders, generative models, or classifiers; see MAP 2.1) to frame their risks more precisely. Automated tools can also analyze third-party components to assess technology and legal risks (MAP 4.1). Tools such as ISMS Copilot can help maintain a living inventory of AI systems, including external integrations, while documenting each system's knowledge limits and the need for human oversight (MAP 2.2). Analyzing historical data and incident reports helps estimate the likelihood and severity of potential harms. This automation turns what used to be tedious manual work into a streamlined, continuous process. By mapping risks continuously, organizations can align their governance efforts with a clear understanding of their risk landscape.
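To illustrate estimating likelihood and severity from historical incidents, the sketch below computes, per system, an empirical incident rate (incidents per observed quarter), the mean severity, and their product as a rough risk score. The incident log, the 1-5 severity scale, and the quarterly window are hypothetical assumptions; systems with no recorded incidents simply do not appear in the result.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical incident log: (system, quarter, severity on an assumed 1-5 scale)
INCIDENTS = [
    ("loan-classifier", "2024Q1", 4),
    ("loan-classifier", "2024Q3", 2),
    ("support-chatbot", "2024Q2", 3),
]
OBSERVED_QUARTERS = 4  # length of the observation window (assumption)


def estimate_risk(incidents, observed_periods):
    """Per system: incident rate per period, mean severity, and their product."""
    severities = defaultdict(list)
    for system, _period, severity in incidents:
        severities[system].append(severity)

    estimates = {}
    for system, values in severities.items():
        rate = len(values) / observed_periods  # incidents per period, not a probability
        avg_severity = mean(values)
        estimates[system] = {"rate": rate, "severity": avg_severity, "score": rate * avg_severity}
    return estimates


ranked = sorted(estimate_risk(INCIDENTS, OBSERVED_QUARTERS).items(),
                key=lambda item: item[1]["score"], reverse=True)
for system, est in ranked:
    print(system, est)
```

Because the rate is a frequency rather than a probability, it can exceed 1 for systems with several incidents per period; treat the score as a ranking aid, not a calibrated risk estimate.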

Practical implementation for compliance

To put these strategies into practice, start by using AI discovery tools to document the tasks, methods, and knowledge limits of your AI systems (MAP 2.1-2.2). Build a real-time inventory that can answer high-level queries such as "How many users are affected?" or "When was this model last updated?". The inventory should hold key information such as system documentation, data dictionaries, source code, model update dates, and the names of key stakeholders. Automated analysis tools can then continuously map risks across all components, including third-party software and data, to check that they stay within your organization's risk tolerances. Analysis of past incidents helps assess the magnitude of risks more accurately. Because AI systems change over time, this mapping process must stay dynamic and adapt to changes in context, capabilities, and risks throughout the AI lifecycle.
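A minimal sketch of an AI system inventory that can answer queries like the ones above, assuming a small in-memory list. The record fields, helper functions, and example systems are hypothetical; in practice this data would live in a database or GRC tool.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class AISystemRecord:
    """One inventory entry; the fields are an assumed minimal schema."""
    name: str
    task_type: str        # e.g. "classifier", "recommender", "generative" (MAP 2.1)
    known_limits: str     # knowledge limits and human-oversight notes (MAP 2.2)
    affected_users: int
    model_updated: date
    owners: list[str] = field(default_factory=list)
    third_party_components: list[str] = field(default_factory=list)


# Hypothetical example entries
INVENTORY = [
    AISystemRecord("loan-classifier", "classifier",
                   "Not validated for business loans; decisions reviewed by staff",
                   12_000, date(2024, 3, 1), ["credit-risk-team"], ["vendor-feature-store"]),
    AISystemRecord("support-chatbot", "generative",
                   "May give inaccurate answers; escalates to human agents",
                   50_000, date(2024, 6, 15), ["customer-care-team"], ["hosted-llm-api"]),
]


def find(inventory, name):
    record = next((r for r in inventory if r.name == name), None)
    if record is None:
        raise KeyError(name)
    return record


def users_affected(inventory, name):
    """Answers 'How many users are affected?'"""
    return find(inventory, name).affected_users


def last_updated(inventory, name):
    """Answers 'When was this model last updated?'"""
    return find(inventory, name).model_updated


def stale_models(inventory, as_of, max_age_days):
    """Systems whose model has not been updated within max_age_days of as_of."""
    return [r.name for r in inventory if (as_of - r.model_updated).days > max_age_days]


def systems_with_third_party(inventory):
    """Candidates for third-party risk mapping (MAP 4.1)."""
    return [r.name for r in inventory if r.third_party_components]


print(users_affected(INVENTORY, "support-chatbot"))
print(stale_models(INVENTORY, as_of=date(2024, 9, 1), max_age_days=120))
```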

3. Measure performance with AI-driven metrics and testing

Alignment with the NIST core functions

The Measure function drives performance testing using specific metrics identified during the Map phase. These metrics guide risk management and compliance decisions. The data collected feeds the Manage function, which triggers actions such as recalibrating models, mitigating potential impacts, or even retiring systems that no longer meet requirements.

"Measurement provides a traceable basis to inform management decisions. Options may include recalibration, impact mitigation, or removal of the system from design, development, production, or use." – NIST AI RMF Core

In this framing, measurement is not a one-off task but a continuous process embedded throughout the AI lifecycle.

Using AI for automation and efficiency

AI-based tools streamline TEVV (test, evaluation, verification, and validation) processes, reducing manual work while keeping testing methods consistent and scalable. They let organizations monitor key metrics both before deployment and in operation, watching for problems such as drift, which occurs when an AI system's performance or reliability changes because the data it receives has changed. Real-time monitoring is especially important in safety-critical applications because it enables a fast response to failures. Tools such as ISMS Copilot can help provide the level of transparency and accountability that auditors expect. A key practice is to keep a clear separation between the teams that build AI models and the teams responsible for verifying and validating them. This separation preserves objectivity and supports concrete compliance strategies.
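Drift monitoring is often implemented with a statistic that compares two distributions. The sketch below uses the Population Stability Index (PSI), a common choice that the NIST text does not prescribe, to compare a baseline sample of one feature with a production sample over equal-width bins. The bin count, the small floor that avoids `log(0)`, and the usual rule of thumb (below 0.1 stable, 0.1 to 0.25 moderate shift, above 0.25 significant shift) are industry conventions, not NIST thresholds.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a current sample of one numeric feature."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # avoid division by zero when all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            counts[min(int((v - lo) / width), bins - 1)] += 1
        # floor empty bins at a tiny value so the log term stays finite
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]        # values seen at training time
current = [0.5 + i / 200 for i in range(100)]   # production values shifted upward
psi = population_stability_index(baseline, current)
print(f"PSI = {psi:.2f}", "significant drift" if psi > 0.25 else "no significant drift")
```

Equal-width bins over the pooled range keep the example short; quantile bins computed on the baseline are a frequent alternative when feature distributions are skewed.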

Practical implementation for compliance

Building on the risk inventory established earlier, organizations should select metrics that address the most pressing risks identified during mapping. These metrics should align with the seven characteristics of trustworthy AI defined by NIST: valid and reliable; safe; secure and resilient; accountable and transparent; explainable and interpretable; privacy-enhanced; and fair with harmful bias managed. Real-time monitoring and feedback loops are essential for spotting performance problems or risk drift, and user feedback can further refine ongoing assessments. It is equally important to document all testing processes, including for risks that are hard to quantify. To ensure impartiality, involve independent assessors who did not take part in development. Finally, metrics should account for the socio-technical aspects of AI by considering how different groups may be affected, even if they are not direct users.
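One way to tie metrics to the seven trustworthiness characteristics is a small registry that checks each metric against its threshold and also reports characteristics that have no metric at all, which are the ones whose testing gaps need documenting. The metric names, values, and thresholds below are hypothetical; only the seven characteristic names come from the AI RMF.

```python
from dataclasses import dataclass

# The seven trustworthiness characteristics named in the NIST AI RMF
CHARACTERISTICS = (
    "valid and reliable",
    "safe",
    "secure and resilient",
    "accountable and transparent",
    "explainable and interpretable",
    "privacy-enhanced",
    "fair with harmful bias managed",
)


@dataclass
class Metric:
    name: str
    characteristic: str
    value: float
    threshold: float
    higher_is_better: bool = True

    def __post_init__(self):
        if self.characteristic not in CHARACTERISTICS:
            raise ValueError(f"unknown characteristic {self.characteristic!r}")

    def passes(self) -> bool:
        if self.higher_is_better:
            return self.value >= self.threshold
        return self.value <= self.threshold


def review(metrics):
    """Return (failing metric names, characteristics with no metric)."""
    failing = [m.name for m in metrics if not m.passes()]
    covered = {m.characteristic for m in metrics}
    uncovered = [c for c in CHARACTERISTICS if c not in covered]
    return failing, uncovered


# Hypothetical metrics and thresholds
metrics = [
    Metric("accuracy", "valid and reliable", 0.91, 0.90),
    Metric("feature_psi", "valid and reliable", 0.05, 0.10, higher_is_better=False),
    Metric("demographic_parity_difference", "fair with harmful bias managed", 0.12, 0.10,
           higher_is_better=False),
]
failing, uncovered = review(metrics)
print("Failing:", failing)
print("No metric yet (document why):", uncovered)
```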

4. Manage risks and audits with AI

Alignment with the NIST core functions

The Manage function is the last of the four functions in the NIST AI RMF: it is where organizations actively treat the risks identified under Map and Measure. Risk management is not a one-off task; it requires ongoing attention and consistent resource allocation, as set out by the Govern function. Manage bridges the earlier steps of identifying and assessing risks and day-to-day operational control.

"The MANAGE function entails allocating risk resources to mapped and measured risks on a regular basis and as defined by the GOVERN function." – NIST AI RMF 1.0

At its core, effective risk management means making critical decisions: continue deployment, mitigate potential risks, or halt operations entirely if risks exceed acceptable levels. As NIST puts it, "In cases where an AI system presents unacceptable negative risk levels ... development and deployment should cease in a safe manner until risks can be sufficiently managed."

Using AI for automation and efficiency

AI tools turn risk management from a slow, manual process into a continuous, real-time operation. They excel at detecting performance issues and unexpected behavior that human oversight might miss, especially in complex systems that include third-party components such as pre-trained models. Risks latent in such models often surface only once the models are in use.

Automated monitoring enables faster responses when something goes wrong. For example, AI can immediately flag unusual activity and trigger protocols that shut down systems operating outside their intended parameters. Tools such as ISMS Copilot also simplify documentation, making risks easier to track and manage throughout the process.
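The automated "flag, then halt" behavior described above resembles a circuit breaker. In this sketch, readings more than `z_limit` standard deviations from a baseline mean count as anomalies, and a run of `max_consecutive` anomalies trips the breaker and calls an `on_trip` hook. The z-score rule, the parameter defaults, and the hook are assumptions chosen for illustration; production systems often use more robust detectors.

```python
import math
from statistics import mean, stdev


class CircuitBreaker:
    """Halts a system after repeated out-of-range readings of a monitored metric.

    The expected range comes from a baseline window: readings more than
    z_limit standard deviations from the baseline mean count as violations.
    """

    def __init__(self, baseline, z_limit=3.0, max_consecutive=3, on_trip=None):
        self.mu = mean(baseline)
        self.sigma = stdev(baseline)   # needs at least two baseline values
        self.z_limit = z_limit
        self.max_consecutive = max_consecutive
        self.on_trip = on_trip         # hypothetical hook, e.g. disable an endpoint
        self.consecutive = 0
        self.tripped = False
        self.alerts = []

    def observe(self, value):
        """Record one reading; return True while the system may keep running."""
        if self.tripped:
            return False
        if self.sigma:
            z = (value - self.mu) / self.sigma
        else:
            z = 0.0 if value == self.mu else math.inf
        if abs(z) > self.z_limit:
            self.consecutive += 1
            self.alerts.append(f"reading {value} outside expected range (z={z:.1f})")
            if self.consecutive >= self.max_consecutive:
                self.tripped = True
                if self.on_trip:
                    self.on_trip(self.alerts[-1])
        else:
            self.consecutive = 0  # only consecutive anomalies trip the breaker
        return not self.tripped


halted = []
breaker = CircuitBreaker([0.50, 0.52, 0.49, 0.51, 0.50, 0.48, 0.52], on_trip=halted.append)
for reading in [0.51, 0.90, 0.91, 0.93, 0.50]:
    if not breaker.observe(reading):
        print("system halted:", halted[0])
        break
```

Requiring several consecutive anomalies trades a little reaction time for fewer false shutdowns; every flagged reading is still recorded in `alerts` for the audit trail.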

Practical implementation for compliance

Once automated systems have identified risks, the next step is to act. Start by prioritizing risks by likelihood and potential impact, as identified during the Map and Measure phases. For high-priority risks, develop clear response plans that state whether each risk will be mitigated, transferred, avoided, or accepted. Where needed, put automated "circuit breakers" in place to disable systems that exceed acceptable risk levels.
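A likelihood x impact ranking with an explicit response for each risk can be sketched as follows. The 1-5 scales, the high-priority threshold of 15, and the example register are hypothetical; the four response options (mitigate, transfer, avoid, accept) are the ones named above.

```python
from dataclasses import dataclass

RESPONSES = {"mitigate", "transfer", "avoid", "accept"}


@dataclass
class Risk:
    risk_id: str
    description: str
    likelihood: int   # 1 (rare) to 5 (almost certain); assumed scale
    impact: int       # 1 (negligible) to 5 (severe); assumed scale
    response: str     # one of RESPONSES
    owner: str

    def __post_init__(self):
        if self.response not in RESPONSES:
            raise ValueError(f"unknown response {self.response!r}")
        for name in ("likelihood", "impact"):
            if not 1 <= getattr(self, name) <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(risks, high_threshold=15):
    """Rank risks by likelihood x impact; return (ranked, high_priority)."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return ranked, [r for r in ranked if r.score >= high_threshold]


# Hypothetical risk register
register = [
    Risk("R1", "Drift degrades credit decisions", 5, 4, "mitigate", "credit-risk-team"),
    Risk("R2", "Vendor model licence changes", 2, 5, "transfer", "procurement"),
    Risk("R3", "Chatbot gives medical advice", 4, 4, "avoid", "customer-care-team"),
    Risk("R4", "Minor UI mislabelling", 1, 2, "accept", "product"),
]
ranked, high = prioritize(register)
for risk in high:
    print(risk.risk_id, risk.score, risk.response, risk.owner)
```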

It is just as important to document residual risks for audit purposes and to extend monitoring to third-party components so that no blind spots remain. Finally, set up post-deployment processes to collect feedback and field data, which will help you address unforeseen risks that emerge over time. This continuous cycle of monitoring and action supports compliance and keeps systems operating within safe limits.
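For audit-ready records of residual risk, one option is a tamper-evident, append-only log in which each entry's SHA-256 hash covers the previous entry's hash. This hash-chaining approach is a sketch of one technique, not something the NIST framework mandates; `AuditLog` and the record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log where each entry's hash covers the previous hash and the record."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash, record):
        payload = json.dumps(record, sort_keys=True)  # canonical field order
        return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        record = dict(record)  # copy so later edits by the caller do not alter the log
        digest = self._digest(prev_hash, record)
        self.entries.append({"record": record, "prev": prev_hash, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; any edited entry breaks it."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash or entry["hash"] != self._digest(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"risk_id": "R2", "residual_level": "medium", "accepted_by": "CRO", "date": "2024-07-01"})
log.append({"risk_id": "R4", "residual_level": "low", "accepted_by": "product lead", "date": "2024-07-02"})
print(log.verify())                                  # True
log.entries[0]["record"]["residual_level"] = "low"   # simulated after-the-fact edit
print(log.verify())                                  # False
```

A chain like this detects edits to earlier entries; detecting truncation of the newest entries also requires storing the latest hash somewhere independent.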


5. Automate cross-framework mapping with AI

Alignment with the NIST core functions

Cross-framework mapping plays a crucial role in integrating the NIST AI Risk Management Framework (AI RMF) with other standards. Here is how it aligns with the NIST core functions:

- Govern: establishes the baseline policies and procedures.

- Map: identifies overlapping legal and technology risks (for example, MAP 4.1).

- Measure: develops metrics that support audits and assessments.

- Manage: guides risk responses across multiple compliance standards.

"The Framework is intended to build on, align with, and support AI risk management efforts by others." – NIST

Using AI to streamline the process

Manually mapping frameworks such as the NIST AI RMF, ISO 27001, and SOC 2 is tedious and error-prone. AI simplifies the process by using automated semantic analysis to detect overlapping controls. Because the AI RMF Playbook is available in machine-readable formats such as JSON, CSV, and Excel, AI-based governance, risk, and compliance (GRC) tools can quickly align these controls to address AI-specific risks.
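Production GRC tools may use embeddings or language models for this kind of semantic matching. As a deliberately simple stand-in, the sketch below scores pairs of control descriptions by the Jaccard similarity of their word sets and keeps pairs above a cutoff as candidates for human review. The AI RMF texts are abridged paraphrases; the target control IDs (`CTRL-A` and so on) and their wording are hypothetical, not quoted from ISO 27001 or SOC 2.

```python
import re

STOP_WORDS = {"a", "an", "and", "are", "for", "in", "is", "of", "on", "the", "to"}


def tokens(text: str) -> set[str]:
    """Lower-cased word set with a few common stop words removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}


def jaccard(a: str, b: str) -> float:
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def suggest_mappings(source: dict, target: dict, min_score: float = 0.2):
    """Candidate (source_id, target_id, score) pairs for human review, best first."""
    pairs = [
        (sid, tid, round(jaccard(stext, ttext), 2))
        for sid, stext in source.items()
        for tid, ttext in target.items()
    ]
    return sorted((p for p in pairs if p[2] >= min_score), key=lambda p: p[2], reverse=True)


# Abridged paraphrases of two AI RMF subcategories
NIST_AI_RMF = {
    "GOVERN 1.6": "Mechanisms are in place to inventory AI systems",
    "MAP 4.1": "Approaches for mapping legal risks of third-party data and software are in place",
}
# Hypothetical controls from another framework (IDs and wording are placeholders)
OTHER_FRAMEWORK = {
    "CTRL-A": "Maintain an inventory of information assets and systems",
    "CTRL-B": "Manage risks from third-party software suppliers",
    "CTRL-C": "Encrypt data in transit",
}
for sid, tid, score in suggest_mappings(NIST_AI_RMF, OTHER_FRAMEWORK):
    print(f"{sid} <-> {tid}: {score}")
```

Word overlap misses synonyms and rewards shared boilerplate, which is exactly why real tools move to semantic models; either way, the output is a shortlist for an analyst, not a finished mapping.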

Steps for practical implementation

To implement automated cross-framework mapping effectively:

- Download the resources: get the NIST AI RMF Playbook in structured formats and use NIST's crosswalk documents as a reference for AI prompts.

- Centralize the system inventory: maintain a central inventory of AI systems (GOVERN 1.6), focusing on high-risk systems and those that handle sensitive data.

- Use automation tools: use tools such as ISMS Copilot to identify overlapping controls across more than 30 frameworks. This approach shortens assessment time and improves compliance accuracy.
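Once a crosswalk exists, it can drive evidence reuse: a file collected for one NIST AI RMF subcategory can be listed against every mapped control in other frameworks. The sketch assumes crosswalk rows as `(subcategory, framework, control)` tuples; the control IDs and file names are hypothetical placeholders.

```python
from collections import defaultdict

# Hypothetical crosswalk rows: (NIST AI RMF subcategory, other framework, control ID).
# Control IDs are placeholders, not real ISO 27001 or SOC 2 identifiers.
CROSSWALK = [
    ("GOVERN 1.6", "ISO 27001", "ISO-CTRL-X"),
    ("GOVERN 1.6", "SOC 2", "SOC2-CTRL-Y"),
    ("MAP 4.1", "ISO 27001", "ISO-CTRL-Z"),
]


def build_index(rows):
    """Map each NIST subcategory to the (framework, control) pairs it corresponds to."""
    index = defaultdict(list)
    for subcategory, framework, control in rows:
        index[subcategory].append((framework, control))
    return dict(index)


def evidence_coverage(evidence, index):
    """For each evidence file, list every mapped control it can also support."""
    coverage = defaultdict(set)
    for subcategory, files in evidence.items():
        for file_name in files:
            for framework, control in index.get(subcategory, []):
                coverage[file_name].add(f"{framework}:{control}")
    return dict(coverage)


def unmapped_subcategories(evidence, index):
    """Subcategories with evidence but no crosswalk entry (gaps to review)."""
    return sorted(s for s in evidence if s not in index)


index = build_index(CROSSWALK)
evidence = {"GOVERN 1.6": ["ai-inventory-export.csv"], "MEASURE 2.7": ["pentest-report.pdf"]}
print(evidence_coverage(evidence, index))
print(unmapped_subcategories(evidence, index))
```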

Automating cross-framework mapping is not a one-off task. It is an ongoing process that adapts as risks evolve, helping organizations maintain a resilient and effective compliance strategy.

Implementing the NIST AI RMF: a roadmap to responsible AI

Conclusion

Adopting the NIST AI Risk Management Framework does not have to be an insurmountable task. By applying the five AI-driven practices discussed here, such as automated policy creation and cross-framework mapping, organizations can turn a tedious manual process into an efficient, scalable one. With the right tools and support, the framework's core pillars can be implemented in as little as 4 to 6 weeks.

The reality is this: while more than 75% of organizations use AI, only 26% manage to extract measurable value beyond proofs of concept. This is where AI-based compliance tools come in. They simplify execution, making framework adoption more consistent, traceable, and repeatable.

Akash Lomas, a technologist at Net Solutions, sums up why this shift matters:

"Managing AI responsibly is not just a safeguard; it is a growth driver." – Akash Lomas

Platforms such as ISMS Copilot, which support more than 30 frameworks including NIST SP 800-53, ISO 27001, and SOC 2, are changing the game. They automate control mapping, generate compliance documents, and provide real-time insight into your compliance status. This means risks can be flagged immediately and organizations can stay audit-ready at all times.

Moving from manual, reactive governance to proactive, automated compliance is not only about saving time. It is about earning the trust of regulators, customers, and stakeholders while turning AI from a risky venture into a strategic advantage. Whether you are harmonizing several frameworks or focusing on specific compliance measures, AI-based solutions make the process not only manageable but sustainable.

Frequently Asked Questions

How can AI simplify creating governance policies for the NIST Cybersecurity Framework?

AI simplifies developing governance policies for the NIST Cybersecurity Framework (CSF) by turning tedious manual processes into streamlined, automated workflows. It can review existing security policies, risk assessments, and asset inventories, then align them with the CSF core functions (Identify, Protect, Detect, Respond, and Recover, plus Govern, which CSF 2.0 added in 2024) and their subcategories. Using natural language generation, AI can produce policy statements that match the required controls, fill in templates, and even handle versioning details, all within minutes.
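Template filling, one of the steps mentioned above, can be sketched with Python's standard `string.Template`. The template layout, field names, and example values are hypothetical; `substitute()` raises `KeyError` when a placeholder is missing, so an incomplete draft fails loudly instead of shipping with gaps.

```python
from datetime import date
from string import Template

# Hypothetical policy layout; real templates would come from your GRC tool.
POLICY_TEMPLATE = Template(
    "$title\n"
    "Version: $version (effective $effective)\n"
    "Owner: $owner\n"
    "Framework reference: $reference\n"
    "\n"
    "$statement\n"
)


def render_policy(fields: dict) -> str:
    """Fill the template; substitute() raises KeyError if any placeholder is missing."""
    return POLICY_TEMPLATE.substitute(fields)


draft = render_policy({
    "title": "Data Classification Policy",
    "version": "1.2",
    "effective": date(2024, 7, 1),
    "owner": "Information Security",
    "reference": "NIST CSF (subcategories to be confirmed by reviewer)",
    "statement": "All data must be labelled Public, Internal, or Confidential before it is stored or shared.",
})
print(draft)
```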

For example, tools such as ISMS Copilot let organizations request tailored policies, such as a NIST-aligned data classification policy. These tools produce review-ready documents that incorporate the latest framework updates and the organization's specific needs. This automation reduces human error, speeds up policy approval, and keeps compliance documentation current, freeing teams to focus on more strategic initiatives.

How does AI help identify and manage risks in AI systems?

AI changes how organizations identify and manage risks by automating the creation and maintenance of an inventory of AI systems, datasets, models, and their dependencies. This ties directly to the NIST AI Risk Management Framework (AI RMF), which stresses the need to map and manage AI risks effectively. With AI-based tools, tasks such as tracking model versions, tracing data provenance, and detecting anomalies become streamlined, reducing manual work and surfacing risks that might otherwise go unnoticed.

Take ISMS Copilot, often described as the "ChatGPT of ISO 27001". It applies these principles to frameworks such as NIST SP 800-53 by analyzing configuration data, code repositories, and cloud services to produce a comprehensive risk map. This lets organizations quickly identify compliance gaps and determine which controls are needed while keeping their inventory current. By translating complex technical data into standardized risk management language, ISMS Copilot simplifies alignment with the NIST AI RMF and makes it much easier to manage.

How can AI tools simplify compliance with frameworks such as NIST and ISO 27001?

AI tools simplify the traditionally complex and tedious work of meeting the compliance requirements of frameworks such as NIST and ISO 27001. They analyze control sets and frameworks to automatically map the links between standards such as NIST SP 800-53 and ISO 27001, letting organizations identify gaps, prioritize remediation, and reuse evidence across multiple frameworks. This approach can significantly reduce the time and effort needed to achieve compliance.

Beyond mapping, AI can create tailored policies, fill in templates, and generate audit-ready documents such as risk assessments or control logs from minimal input. Some advanced tools also offer real-time monitoring that identifies deviations and recommends corrective actions to maintain continuous compliance. ISMS Copilot, for example, focuses on these tasks: it acts as an AI-powered assistant that helps compliance professionals align with multiple frameworks while reducing costs and manual work.